BlindVision

What is it?

Most of us are aware of the struggles the visually impaired (VI) face in daily life. For them, simple tasks such as walking out a door or climbing a flight of stairs can be challenging. Technology has come a long way, yet few affordable navigation solutions exist for the blind.

The goal was to give the VI an intuitive sense of direction. A 3D sound played from the point the person wants to reach provides exactly that: the side the sound comes from tells them which way to correct their course, and its loudness tells them how far away the destination is.
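The loudness cue comes from distance attenuation. The write-up does not say which distance model was configured, so as an assumption, here is a small Python reproduction of OpenAL's default model, `AL_INVERSE_DISTANCE_CLAMPED`, as defined in the OpenAL 1.1 specification. The parameters mirror `AL_REFERENCE_DISTANCE`, `AL_ROLLOFF_FACTOR` and `AL_MAX_DISTANCE` with their default values (infinity stands in for `FLT_MAX`).

```python
def inverse_distance_clamped_gain(distance: float,
                                  ref_distance: float = 1.0,
                                  rolloff: float = 1.0,
                                  max_distance: float = float("inf")) -> float:
    """Gain from OpenAL's AL_INVERSE_DISTANCE_CLAMPED distance model."""
    # Clamp so sources closer than ref_distance play at full gain
    # and sources beyond max_distance stop getting quieter.
    d = min(max(distance, ref_distance), max_distance)
    return ref_distance / (ref_distance + rolloff * (d - ref_distance))


for dist in (0.5, 1.0, 2.0, 4.0):
    print(f"distance {dist}: gain {inverse_distance_clamped_gain(dist):.2f}")
```

With the defaults, the gain halves at twice the reference distance and drops to a quarter at four times it, which is what lets a listener judge roughly how far away the destination is.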

During my undergraduate studies at Amrita, I worked on this project and published a paper on it.

Implementation

The two primary objectives were:

- Build a cost-effective solution

- Efficiently map the surroundings with depth information

We first experimented with ultrasonic sensors for depth mapping, but soon found that their range and accuracy fell short of our expectations. Better ultrasonic sensors exist, but they cost considerably more.

After trying a couple of other options such as IR, we settled on stereoscopic vision. It proved reasonably accurate and had the range we needed, so we knew we had struck gold. In retrospect, I must admit we were biased toward stereo vision because, compared with the other options, it seemed to mimic human perception to some extent.
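To make the depth step concrete, here is a minimal pure-Python sketch of the standard stereo relation Z = f * B / d, where d is the horizontal shift (disparity) of a point between the left and right images, f is the focal length in pixels, and B is the distance between the two cameras. The write-up does not say which matching algorithm was used; the sum-of-absolute-differences (SAD) block matcher below is a simplified stand-in working on a single rectified scanline, and the 500 px focal length and 6 cm baseline are hypothetical values.

```python
def match_disparity(left_row, right_row, x, half_window=2, max_disparity=16):
    """Return the disparity (in pixels) of pixel x in left_row.

    Compares a window around x in the left row with windows shifted
    d pixels to the left in the right row, and keeps the shift with the
    smallest sum of absolute differences (SAD).
    """
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("window around x falls outside the row")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # the shifted window would leave the right image
        cost = sum(
            abs(left_row[x + k] - right_row[x - d + k])
            for k in range(-half_window, half_window + 1)
        )
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo relation Z = f * B / d (depth in the units of B)."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity: point at (or beyond) infinity
    return focal_px * baseline_m / disparity_px


# Synthetic scanlines: the same intensity bump appears 4 px further left
# in the right image.
bump = [50, 120, 200, 120, 50]
left_row = [0] * 10 + bump + [0] * 15
right_row = [0] * 6 + bump + [0] * 19

d = match_disparity(left_row, right_row, x=12)
print(d)  # 4
# Hypothetical camera: 500 px focal length, 6 cm baseline.
print(depth_from_disparity(d, focal_px=500, baseline_m=0.06))
```

A library matcher such as OpenCV's `StereoBM` computes a whole disparity map at once, but the disparity-to-depth relation is the same. Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for nearby ones.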

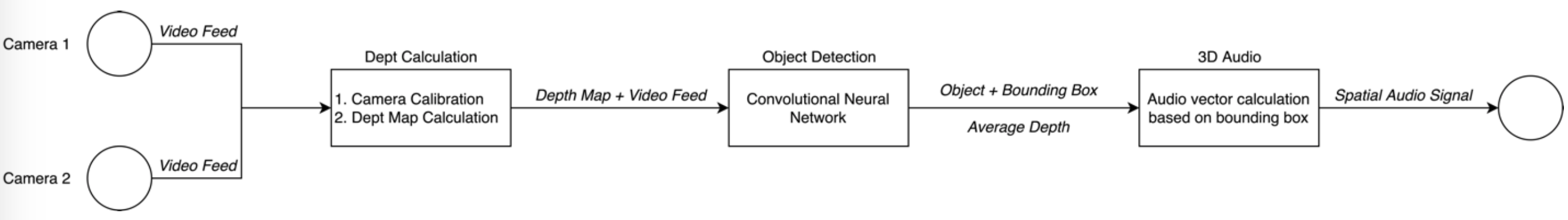

With depth estimation solved, the next task was to guide the VI person, i.e., to indicate whether the destination was to their left or right. The obvious approach was object detection to locate the destination in the surroundings. We used the bounding box produced by the detector to calculate how far the object was from the centre of the image, and this “delta” gave the object's left/right offset from the VI person's perspective.
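The “delta” computation can be sketched as follows. This is an illustration under assumptions, not the project's code: the box format `(xmin, ymin, xmax, ymax)` in pixels, the helper names, and the use of the image centre as the camera's optical centre are all hypothetical. The second helper shows how a pixel offset can be turned into a lateral distance once depth is known, using the pinhole camera model.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # hypothetical (xmin, ymin, xmax, ymax) in pixels


def box_offset(box: Box, image_width: int, image_height: int) -> Tuple[float, float]:
    """Offset of the box centre from the image centre, in pixels.

    Positive dx means right of centre; positive dy means above centre
    (image rows grow downward, so the vertical sign is flipped).
    """
    xmin, ymin, xmax, ymax = box
    centre_x = (xmin + xmax) / 2
    centre_y = (ymin + ymax) / 2
    dx = centre_x - image_width / 2
    dy = image_height / 2 - centre_y
    return dx, dy


def offset_to_metres(dx_px: float, dy_px: float,
                     depth_m: float, focal_px: float) -> Tuple[float, float]:
    """Pinhole back-projection: lateral offset = pixel offset * depth / focal length."""
    return dx_px * depth_m / focal_px, dy_px * depth_m / focal_px


dx, dy = box_offset((400, 200, 480, 280), image_width=640, image_height=480)
print(dx, dy)  # 120.0 0.0
# Hypothetical: object 2 m away, 500 px focal length.
print(offset_to_metres(dx, dy, depth_m=2.0, focal_px=500))  # (0.48, 0.0)
```

Using the box centre keeps the offset stable as the box grows or shrinks while the person approaches the object.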

With these problems solved, we had three coordinates for the destination: X (how far to the left/right), Y (how far above/below), and Z (depth). These coordinates are enough to convey the destination's location to the user. We mapped them into OpenAL's coordinate system, which let OpenAL play a 3D (spatial) audio signal from that point.
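OpenAL uses a right-handed coordinate system: with the default listener orientation ("at" = (0, 0, -1), "up" = (0, 1, 0)), +X is to the listener's right, +Y is up, and the listener faces -Z. The mapping step can therefore be sketched as below, assuming X grows to the right, Y grows upward, and Z is the forward depth (the write-up does not spell out its axis conventions, and the `scale` factor is hypothetical). The resulting tuple is what would be handed to OpenAL as the source position, for example via `alSource3f(source, AL_POSITION, x, y, z)` in the C API.

```python
import math
from typing import Tuple


def to_openal_position(x_right: float, y_up: float, z_depth: float,
                       scale: float = 1.0) -> Tuple[float, float, float]:
    """Map (right, up, forward depth) into OpenAL's right-handed space.

    With the default listener orientation, a point straight ahead of the
    listener needs a negative Z coordinate, so the depth is negated.
    `scale` is a hypothetical factor for converting metres to scene units.
    """
    return (x_right * scale, y_up * scale, -z_depth * scale)


def azimuth_degrees(x_right: float, z_depth: float) -> float:
    """Horizontal angle to the target: 0 = straight ahead, positive = to the right."""
    return math.degrees(math.atan2(x_right, z_depth))


print(to_openal_position(0.48, 0.0, 2.0))  # (0.48, 0.0, -2.0)
print(round(azimuth_degrees(1.0, 1.0), 1))  # 45.0
```

One practical detail: OpenAL only spatializes mono sources, so the guidance sound has to be loaded as a mono buffer for the position to have any effect.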

The pipeline was deployed on a pair of Raspberry Pis, housed in a cardboard enclosure we made and worn around the head. A prototype is shown below.

Tools Used

Software

- Python3

- OpenAL

- TensorFlow

- tflearn

Hardware

- 2x Raspberry Pi

- 2x Pi Camera

- A pair of earphones

The methodology was formalized and published in Procedia Computer Science. Check out the publications here.

Demo

Although the device has come a long way since, here is a video demonstrating a very early version of the system. We used this demo for our submission to the Smart India Hackathon, where we unfortunately weren't selected (long story).